Editor’s Note: Learning Management Systems (LMS) allow websites to provide much more than syllabus and course materials; they integrate a wide range of learning resources including library and audiovisual, learning laboratories, interactive multimedia and simulators, databases, communication tools such as email, threaded discussions, blogs and wikis, and social media such as MySpace and Facebook. LMS facilitate advisement, admission, registration, financial aid, counseling, testing and evaluation. The following research investigates doctoral program websites in Educational Technology in their present stages of evolution.

Doctoral Program Websites in Educational Technology:

An Investigation of the Information Availability and Accessibility

Albert D. Ritzhaupt, William Shore, Steven Eakins, Enrique Caliz, Lucien Millette

USA

Abstract

This research identifies the type of information that prospective doctoral students in educational technology seek, examines the extent to which the discipline’s current doctoral program websites include that information, and assesses the degree to which the websites are accessible to disabled students. Fifty doctoral websites in educational technology were examined. Results show variability in the type of content available and show that many sites have elements that are inaccessible to disabled students. Recommendations are provided.

Introduction

The Internet and its related software and systems have extensively changed the way individuals interact with the world. The Internet is the fastest growing communications medium in our history (Bell & Tang, 2000), and as a consequence, the way in which higher education institutions interact with prospective students has also fundamentally changed. In particular, the web presence of an institution has been a topic of investigation in many disciplines and from many perspectives (Poock & Lefond, 2001; Cavanaugh & Cavanaugh, 2002; Acquaro, 2004; Hans, 2001; Poock & Andrews, 2006; Sibuma, Boxer, Acquaro, & Creus, 2005). A university or college website may be the most important marketing tool for recruiting prospective students in the information age, and as noted by Abrahamson (2000), strong evidence suggests that modern students use the information found there to inform their decision-making.

Perhaps the most authoritative work on college and university websites was conducted by Poock (2001, 2003, & 2006) and his colleagues. Poock’s research has systematically investigated the characteristics of effective websites in the context of higher education and surveyed prospective undergraduate (2001, 2006) and graduate students (2003) to determine which characteristics were most important to the target audience. The research investigated the following relevant factors from a student perspective: content, site architecture and organization, ease of navigation, download speeds, focus on audience, distinctiveness of the website, and the impact of graphics. Overall, the two most important characteristics were the website’s content and site architecture/organization (Poock & Lefond, 2001).

Another important area of discourse has been the accessibility of college or university websites (Acquaro, 2004; Sibuma et al., 2005). Because many colleges or universities are public entities, their websites should conform to the requirements stated in Section 508, a law amended by Congress in 1998 requiring federal entities to make their web sites accessible to people with disabilities (Section 508, 2008). Accessibility has become such an important topic that the World Wide Web Consortium (W3C) has developed the Web Accessibility Initiative (WAI) to assist developers in making websites accessible (WAI, 2008). Research in this area has provided recommendations on designing accessible websites (Cavanaugh & Cavanaugh, 2002; Acquaro, 2004; Sibuma et al., 2005), but few studies have documented whether the sites are truly accessible.

In practice, universities divide website responsibilities into a hierarchical system in which departments or specialized academic units are charged with creating and maintaining information specific to their students and programs. However, there is little to no documented empirical research on the quality of individual department websites, which often house the information most relevant to students within a program. One published study was identified in the discipline of family sciences. This study aimed 1) to identify the information potential graduate students would seek on a department website, and 2) to determine the extent to which current department websites meet those expectations (Hans, 2001).

The purpose of this research, in distinction to previous studies, is 1) to identify, by means of an extensive review of literature and a review of existing doctoral program websites, the information prospective doctoral students in the area of educational technology seek on doctoral program websites, 2) to examine the extent to which the current doctoral program websites in the area of educational technology include the information, and 3) to assess the degree to which the websites are accessible to disabled students. The goal of this research is to gauge the status quo of educational technology doctoral program websites in an effort to assist programs in improving them.

Theoretical Framework

Information Availability

To address the first purpose of this research (identifying information prospective doctoral students seek), a review of existing research literature on factors that influence graduate student decisions (Kallio, 1994; Pock & Love, 2001) and a review of ten existing doctoral program websites in educational technology were used. The review of current doctoral program websites showed how these factors are currently manifested on websites.

Pock and Love (2001) identified 27 important factors that influence doctoral student enrollment in higher education programs from a sample of 125 doctoral students. Kallio (1994) identified 31 factors that influence enrollment decisions in graduate programs from a sample of 2,834 students admitted into masters and doctoral programs. Those factors that could manifest themselves in a web presence were incorporated into the framework. Some factors were not incorporated into the framework because they were outside the control of a program. For instance, input from a spouse/partner (Pock & Love, 2001) may be an important factor for a student enrollment decision, but this factor is outside the control of a program.

The factors were structured into a content framework that includes six content dimensions: admissions; curriculum and offerings; program requirements; student opportunities, resources, and financial assistance; program reputation; and faculty credentials. The result of this synthesis process is shown in Table 1 and includes the content dimensions, the researchers, and the factors. Each of these content dimensions was traced to items that could be available on websites to assist student decision-making.

Table 1

Student enrollment factors in graduate education.

| Content Dimension | Kallio, 1994 | Pock & Love, 2001 |
| --- | --- | --- |
| Admissions | Admissions process and policies | Speed of acceptance; Ease of admission process |
| Curriculum and Offerings | Diversity of course offerings; Particular field of study available; Geographic location | Availability of evening classes; Diversity of course offerings; Location |
| Program Requirements | Program structure and requirements; Length-of-time to degree; Ability to pursue studies part-time; Ability to continue in current job | Flexible program requirements; Able to continue working in job; Able to pursue studies part time; Time required to complete program |
| Student Opportunities, Resources, and Financial Assistance | Social cultural opportunities; Library and facilities collections; Research and computer facilities; Sensitivity to minorities and others; Research opportunities; Opportunities to teach | Amount of assistantship stipend; Opportunity for assistantship; Library and facility collections |
| Program Reputation | Institution’s academic reputation; Value of degree; Size of the department; Quality of students in program; Post graduate job placement | Reputation of program; Academic accreditations; Faculty-to-student ratio; Input from students in program; Input from employer; Rigor of program |
| Faculty Credentials | Reputation of department’s faculty; Quality of teaching; Opportunity to work with faculty | Reputation of faculty |

Curriculum and Offerings: The curriculum and offerings dimension refers to the diversity of courses available within the curriculum, the frequency with which they are offered, and the flexibility of the course offerings (e.g., night classes or distance options). Pock and Love (2001) and Kallio (1994) discussed the diversity of course offerings and location as relevant factors. Location was included in this dimension to capture the availability of distance learning options. Pock and Love (2001) also emphasize the availability of course offerings (e.g., evening classes), while Kallio (1994) highlights the particular fields of study available.

Program Requirements: The program requirements content dimension addresses the structural and time requirements of the program, such as residency requirements. Pock and Love (2001) and Kallio (1994) both identify the length of time to completion and the flexibility of the program to permit students to study part-time or continue in their current jobs as important factors.

Student Opportunities, Resources, and Financial Assistance: This dimension describes the opportunities for students to attain financial assistance (e.g., fellowships or assistantships), the openness to international and minority students, opportunities for research and teaching, and the facilities available to students. Pock and Love (2001) and Kallio (1994) both highlight the importance of facilities. Kallio (1994) addresses social or cultural, minority, and international student opportunities as well as general research and teaching opportunities. Pock and Love (2001) emphasize the importance of assistantship opportunities as well as the award amounts.

Program Reputation: The program reputation content area refers to the dimensions that define academic prestige and a program’s reputation in the academic and professional community. Pock and Love (2001) and Kallio (1994) state that the reputation of the program is an important factor in enrollment decisions. More specific items include the value of a degree, size of the department, quality of the students within the department, accreditation, job placements, faculty-to-student ratios, and input from students within the program and from employers (Kallio, 1994; Pock & Love, 2001).

Faculty Credentials: The faculty credentials dimension addresses the role of the faculty in influencing student choice. Both Pock and Love (2001) and Kallio (1994) underscore the reputation of faculty as a factor. Kallio (1994) adds the quality of teaching and the opportunity to work with a specific faculty member as key factors. Faculty research areas and specializations play an important role in student choice at the doctoral level.

Accessibility

Evaluating the accessibility of websites involves many different approaches, ranging from color blindness checks to automated online validation of markup. The authoritative canon governing web accessibility, as previously noted, is the set of Web Accessibility Initiative (WAI) guidelines published by the W3C. Included in the WAI resources is a suite of tools to assist web developers and publishers in evaluating the accessibility of their sites. One such tool, the Web Accessibility Checker (WEC), was developed by the Adaptive Technology Resource Center at the University of Toronto.

The WEC provides automated validation of the accessibility of a website using the WAI and Section 508 guidelines along three levels of severity: known problems, likely problems, and potential problems (WEC, 2008). Known problems are detected with certainty and include basic dysfunctional elements such as an image tag that lacks the alternative text used by screen reading software. Likely problems refer to functional problems such as an image tag containing non-descriptive information that must be verified using the software tool. Finally, potential problems are things that the software cannot check with certainty. For example, deciding whether an alternative description for an image is too lengthy and potentially unnecessary requires the manual judgment of a web developer or publisher.
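The three severity levels can be illustrated with a minimal Python sketch that sorts image tags by the state of their alternative text. It is not the WEC's implementation: the word list `NON_DESCRIPTIVE`, the cut-off `MAX_ALT_LENGTH`, and the function name `triage` are hypothetical choices made only for this example.

```python
from html.parser import HTMLParser

# Hypothetical word list and length cut-off, chosen only for illustration;
# they are not the checker's actual rules.
NON_DESCRIPTIVE = {"image", "picture", "photo", "graphic", "spacer"}
MAX_ALT_LENGTH = 100


class ImgAltTriage(HTMLParser):
    """Sort <img> tags into the three severity levels described above."""

    def __init__(self):
        super().__init__()
        self.known = []      # alt attribute missing: detectable with certainty
        self.likely = []     # alt present but probably non-descriptive
        self.potential = []  # alt present but long: needs human judgment

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        src = attr_map.get("src", "")
        alt = attr_map.get("alt")
        if alt is None:
            self.known.append(src)
        elif alt.strip().lower() in NON_DESCRIPTIVE or alt.strip() == src:
            self.likely.append(src)
        elif len(alt) > MAX_ALT_LENGTH:
            self.potential.append(src)


def triage(html):
    """Return the image sources flagged at each severity level."""
    parser = ImgAltTriage()
    parser.feed(html)
    parser.close()
    return {
        "known": parser.known,
        "likely": parser.likely,
        "potential": parser.potential,
    }
```

Only the first category can be decided by the code alone; the other two lists are candidates that a person would still need to review.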

Method

Instrument

Using the theoretical framework as a guide, an instrument was developed to document the presence of relevant content dimensions on the doctoral program websites. The instrument has 73 items and is organized into six different sections along with general information (e.g., URL of the doctoral program). All of the items are dichotomously scored using “Present” and “Not Present” with a “Not Applicable” option used in cases where the information was not consistent with the program’s structure. For example, some programs may not require a letter of intent for admissions into the doctoral program.

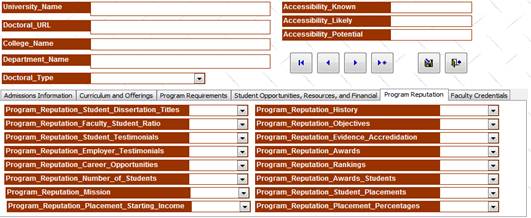

Figure 1. Doctoral program website collection instrument

To facilitate the data collection process, the instrument was implemented using a Microsoft Access database and its form features. A screenshot of the instrument is shown in Figure 1. The data were coded by four different raters with a cumulative inter-rater reliability of 82%. The K-R 20 measures of internal consistency reliability are shown in Table 2. Two content dimensions, program reputation and student opportunities, resources, and financial assistance, did not reach the threshold for high internal consistency reliability (K-R 20 ≥ .70) for these data.

Table 2

Internal consistency reliability for content dimensions

| Content Dimensions | Items | K-R 20 |
| --- | --- | --- |
| Admissions | 13 | 0.79 |
| Curriculum and Offerings | 9 | 0.70 |
| Program Requirements | 12 | 0.78 |
| Student Opportunities, Resources, and Financial Assistance | 14 | 0.60 |
| Program Reputation | 16 | 0.56 |
| Faculty Credentials | 9 | 0.77 |
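For readers unfamiliar with the statistic, K-R 20 (Kuder-Richardson Formula 20) for k dichotomous items is k/(k − 1) multiplied by one minus the ratio of the summed item variances p(1 − p) to the variance of the total scores. The following Python sketch computes it for one content dimension. It makes two assumptions the article does not state: the population variance is used for the total scores, and every item is scored 0 or 1, so "Not Applicable" codes would need separate handling. The function name `kr20` is illustrative.

```python
from statistics import pvariance


def kr20(scores):
    """Kuder-Richardson 20 for rows of 0/1 item scores.

    Each row is one website; each column is one dichotomous item
    (1 = "Present", 0 = "Not Present").
    """
    n_rows = len(scores)
    n_items = len(scores[0])
    if n_items < 2:
        raise ValueError("K-R 20 needs at least two items")
    # Proportion of websites scoring 1 on each item.
    p = [sum(row[j] for row in scores) / n_rows for j in range(n_items)]
    item_variance_sum = sum(pj * (1 - pj) for pj in p)
    # Population variance of the total scores (an assumption; some texts
    # use the sample variance instead).
    total_variance = pvariance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return (n_items / (n_items - 1)) * (1 - item_variance_sum / total_variance)
```

Applied to each block of items in turn, a function of this kind would yield one coefficient per content dimension.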

Data Source

The websites used in this investigation were listed in the Degree Curricula in Educational Communications and Technology, a specialized information directory maintained by the Association for Educational Communications and Technology (AECT). The directory includes a wealth of curriculum-related information, open to the public, to help define the contours of the discipline. The directory also includes links to institutions that currently have doctoral programs. This information was used to define the boundaries of the dataset. Institutions that did not indicate a doctoral program were not included in the data collection efforts. Only institutions within the United States were included in the analysis.

The data were merged with information published on the Carnegie Classification website (Carnegie, 2008). The resulting sample included 50 doctoral program websites. Fifty percent of the doctoral programs offered doctor of philosophy degrees, 24% offered doctor of education degrees, and the remaining 26% offered both degrees. Eighty-two percent of the institutions in the sample were public and the remaining 18% were private. Forty-four percent of the programs were in institutions classified with “very high research activity,” 36% were classified as “high research activity,” 6% were classified as master’s colleges and universities (larger programs), and the remaining 14% had a broad doctoral research university classification.

Procedures

To define the boundaries of the research, some assumptions had to be made in the execution of the data collection. First, a doctoral program website is defined as the website at the Uniform Resource Locator (URL) of the academic unit (e.g., department of educational technology and leadership) that offers the doctoral degree. In many cases, program information was shared with other programs, for example through general admissions pages. The information had to be reachable from the academic unit URL: all relevant information had to be available within the subdomain or one link away from the academic unit URL. Thus, for example, linking to a general graduate school website that included admissions deadlines was acceptable provided that the page was only one click away.

Second, the WEC was applied to only the first page of the program website. Third, the information for an item was sometimes only partially available, and thus a 50% threshold was set to code the data. For example, a doctoral program might have 16 different courses listed and provide example syllabi for only 8 of those courses. In this case, the 50% threshold was reached and the institution would receive a “Present” for the availability of example course syllabi. These assumptions were made to keep the coding consistent and manageable.
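The 50% coding rule can be expressed as a short Python sketch. The function name `code_item` and its parameters are hypothetical; the only behavior taken from the text is that an item counts as “Present” when at least half of the relevant units (here, courses) carry the information.

```python
def code_item(units_with_info, total_units, applicable=True, threshold=0.5):
    """Code one instrument item as Present, Not Present, or Not Applicable.

    units_with_info: number of units (e.g., courses) that show the information
    total_units: number of units listed on the website
    applicable: False when the item does not fit the program's structure
    """
    if not applicable:
        return "Not Applicable"
    if total_units <= 0:
        # Nothing listed, so nothing can be counted as present.
        return "Not Present"
    share = units_with_info / total_units
    return "Present" if share >= threshold else "Not Present"


# The example from the text: syllabi for 8 of 16 listed courses.
print(code_item(8, 16))
```

Using `>=` rather than `>` matters here, because the article's own example sits exactly on the threshold and is coded “Present”.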

Results

Admissions Information

The majority of doctoral programs in educational technology-related disciplines included an admissions contact within the program website as well as an email address and mail point. Seventy-two percent of the program websites posted Graduate Record Examination (GRE) requirements, 72% requested all transcripts from previous institutions, and 64% included specific grade-point average (GPA) requirements.

Thirty-six percent of the programs requested professional statements from potential students, and 26% requested a letter of intent. Only 58% of the doctoral program websites included admissions deadlines on their program website, and a mere 22% included an admissions timeline indicating how long it would take for program decisions to be made.

Courses and Offerings

The courses and offerings content dimension was particularly influenced by the 50% threshold criterion. Some programs did include, for example, student products or example assignments as a way to illustrate the types of skills and knowledge that would be acquired upon completion of the program. However, at least 50% of a program’s courses had to include them in order to be counted, and no program met this criterion. As shown in Figure 3, most programs included course titles, descriptions, and credit hours on their websites. Substantially fewer provided a timetable of when these courses would be offered (36%), example syllabi (12%), or clearly noted distance learning offerings (22%).

Program Requirements

Doctoral programs vary widely in their structure and requirements. Seventy percent or more of the doctoral program websites evaluated included residency requirements (76%), a specified number of dissertation hours (72%), qualifying examinations (70%), and a required course sequence (70%), as shown in Figure 4.

Figure 2. Percentage of Websites including Admissions information

Figure 3. Percentage of Websites including Courses and Offerings information

Thirty-four percent of the program websites provided example completion sequences, from the start of the program to completion of dissertation. Twenty-two percent of the programs specified a maximum completion time, and 12% provided the average time it takes for a student to complete the program. Only 14% of the websites outlined potential minors/cognates and the requirements for the minors, and only 8% of the websites provided a demographic profile of the students currently enrolled in the programs.

Figure 4. Percentage of Websites including Program Requirements information

Student Opportunities, Resources, and Financial Assistance

Perhaps the most important area influencing a student’s decision to enroll in a doctoral program is the type and quantity of resources available. Forty-two percent of the program websites included research assistantship opportunities, 34% outlined fellowship opportunities, and 24% outlined teaching assistantships available to students in the program. However, none of the program websites outlined the percentage of students currently receiving financial assistance within the program.

Thirty-six percent of the program websites included links to affiliated production and research centers within the institution, and 36% highlighted computer resources or labs available to students. Forty-two percent of the websites provided links to relevant professional associations (e.g., Association for Educational Communications and Technology), and 22% included links to relevant student associations. Only 8% of the websites included evidence of student presentations and publications, and none of the sites included any information about student patents that had been awarded. Only 16% of the sites included any notable international student information, and fewer included any information relevant to minority students (12%).

Figure 5. Percentage of Websites including Student Opportunities, Resources, and Financial Assistance information

Figure 6. Percentage of Websites including Program Reputation information

Program Reputation

A program’s reputation can be presented in many different ways on a doctoral program website as shown in Figure 6. Fifty-four percent of the program websites evaluated included a mission statement, 44% provided specific program objectives, and 36% provided a short history of the program. Only 20% of the program websites provided accreditation information, and 6% provided academic ranking information. Twenty percent of the program websites described potential career opportunities upon completing the program, yet only 8% listed student placements, only 4% placement percentages, and none included employer testimonials or starting salary information.

Most doctoral program websites did not include much ancillary information about the program’s reputation. A mere 4% of the programs highlighted the number of students currently in the program, and only 2% provided a faculty-to-student ratio. Eight percent of the doctoral program websites included student testimonials about the program, 8% also listed the dissertation titles of graduates and awards that had been conferred on the program, and 2% highlighted specific awards that had been earned by students within the program.

Faculty Credentials

As shown in Figure 7, most doctoral program websites provided the names of the faculty members (94%) within the program, faculty email addresses (88%), faculty pictures (78%), and the faculty research areas and specialties (78%). Links were provided to faculty websites on 66% of the websites examined and faculty rank was available on 64%. Forty-eight percent of the websites included faculty publications and 30% highlighted faculty awards. Relative to the other content dimensions, this dimension was the most completely covered on the websites.

Figure 7. Percentage of Websites including Faculty Credential information

Website Accessibility

Accessibility appears to be a major problem area for doctoral program websites in educational technology-related disciplines. The number of known accessibility errors on each program website ranged from 0 to 68, with an average of 10.86 (SD = 14.91). The number of likely errors on the main program website ranged from 0 to 50, with an average of 3.16 (SD = 8.3). Finally, the number of potential errors on each doctoral website ranged from 11 to 242, with an average of 107.64 (SD = 51.55). The types of problems ranged from missing alternative text descriptions for images to the use of tables for formatting, which makes it difficult for screen reading software to read the textual information.
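Summary figures of this kind (range, mean, and standard deviation per severity level) can be reproduced from per-site error counts with a few lines of Python. The sketch below is illustrative only: the counts are made-up placeholders rather than the study's data, and the sample standard deviation is an assumption, since the article does not say which form was reported.

```python
from statistics import mean, stdev


def summarize(error_counts):
    """Return the range, mean, and standard deviation of per-site error counts."""
    return {
        "min": min(error_counts),
        "max": max(error_counts),
        "mean": mean(error_counts),
        # Sample SD is an assumption; the article does not specify the form.
        "sd": stdev(error_counts),
    }


# Hypothetical counts for three websites, not data from the study.
print(summarize([0, 4, 8]))
```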

Discussion

Interpretation of these results must be considered in light of the limitations of this research. This research measured only the information available on doctoral program websites at a single point in time, so it provides only a snapshot of the status quo. Additionally, from a data collection perspective, it was difficult if not impossible to discern whether the absence of information from a website meant it was not a program requirement (e.g., for admissions) or whether the website simply did not include the information. Finally, this research did not investigate the usability or navigability of the program websites, only the availability of the information.

So, what can be concluded from this research? One of the primary purposes of this research was to document the type of information that should be made available on doctoral program websites in educational technology-related disciplines. This research resulted in six content dimensions with more than 73 specific pieces of information. The content dimensions include: admissions; curriculum and offerings; program requirements; student opportunities, resources, and financial assistance; program reputation; and faculty credentials. It is conceivable that these dimensions would be similar in other disciplines. Thus, future research should aim at extending and using the instrumentation developed in this research to document the status quo of other disciplines.

The results of the data collection efforts show that doctoral program websites vary widely in the type of information made available to potential and current students. Most doctoral program websites in educational technology provided much of the necessary admissions information, and the sites were also, relative to other content dimensions, stronger in highlighting the unique credentials of their faculty members. This is not to say that there is not room for improvement in these areas, but the websites contained much of the relevant information in these content areas.

Three content dimensions call for improvement: courses and offerings; student opportunities, resources, and financial assistance; and program reputation. Few doctoral program websites provided evidence of student scholarly products like conference presentations or publications. Further, many program websites did not include information to help students gauge whether financial assistance would be made available to them while in the program. Finally, much more effort could be placed on highlighting the reputation of the programs. For instance, providing the titles of student dissertations and their academic placements would require minimal administrative effort.

The final purpose of this research was to gauge the accessibility of the doctoral program websites. This research provides strong evidence that doctoral program websites in educational technology do have major accessibility problems. This is especially problematic for our discipline, which emphasizes the value of web accessibility and assistive technology. At minimum, doctoral program websites should aim at correcting known accessibility problems on their program websites.

The authors do not want this research to communicate that our doctoral program websites are inadequate or do not meet the unique needs of potential students. Rather, the authors believe that there is substantial room for improvement on our doctoral program websites, and that we should be mindful of factors that influence student decisions when creating them. Finding similar research studies to compare with these results has been a fruitless effort. It is hoped that this paper will encourage more participation from researchers in addressing this important problem.

References

Abrahamson, T. (2000). Life and death on the Internet: To Web or not to Web is no longer the question. The Journal of College Admission, 168, 6-11.

Acquaro, P. (2004). Designing, testing & implementing a truly accessible college website. In L. Cantoni & C. McLoughlin (Eds.), Proceedings of World Conference on Educational Multimedia, Hypermedia and Telecommunications 2004 (pp. 5231-5234). Chesapeake, VA: AACE.

Carnegie Classification of Institutions of Higher Education (2008). The Carnegie Foundation for the Advancement of Teaching. Retrieved on March 3, 2008 from: http://www.carnegiefoundation.org/classifications/.

Cavanaugh, C. & Cavanaugh, T. (2002). College website review and revision. In C. Crawford et al. (Eds.), Proceedings of Society for Information Technology and Teacher Education International Conference 2002 (pp. 33-34). Chesapeake, VA: AACE.

Hans, J. D. (2001). The Internet and graduate student recruiting in family science: The good, the bad, and the ugly department websites. Journal of Teaching in Marriage and Family, 1(2), 65-76.

Kallio, R. E. (May-June, 1994). Factors influencing the college choice decisions of graduate students. Paper presented at the Annual Forum of the Association for Institutional Research, New Orleans.

Pock, M. C., & Love, P. G. (2001). Factors influencing the program choice of doctoral students in higher education administration. NASPA Journal, 38(2), 203-223.

Poock, M., & Andrews, B. V. (2006). Characteristics of an effective community college web site. Community College Journal of Research and Practice, 30(9), 687-695.

Poock, M. C., & Lefond, D. (2001). How college-bound prospects perceive university web sites: Findings, implications, and turning browsers into applicants. College and University, 77(1), 15-22.

Poock, M. C., & Lefond, D. (2003). Characteristics of effective graduate school web sites: Implications for the recruitment of graduate students. College and University, 78(3), 15-19.

Section 508 (2008). Retrieved on February 5, 2008 from http://www.section508.gov/.

Sibuma, B., Boxer, D., Acquaro, P. & Creus, G. (2005). Accessibility of a graduate school website for users with disabilities: developing guidelines for user testing. In P. Kommers & G. Richards (Eds.), Proceedings of World Conference on Educational Multimedia, Hypermedia and Telecommunications 2005 (pp. 1178-1179). Chesapeake, VA: AACE.

Web Accessibility Checker (2008). Adaptive Technology Resource Center at the University of Toronto. Retrieved on February 5, 2008 from: http://checker.atrc.utoronto.ca/.

Web Accessibility Initiative (2008). World Wide Web Consortium (W3C). Retrieved on February 5, 2008 from: http://www.w3.org/WAI/.

About the Authors

Albert Ritzhaupt, Ph.D., is Assistant Professor in the College of Education at the University of South Florida. He is a specialist in Instructional Technology, Computer and Information Sciences, and technology integration in K-12 and higher education.

William Shore, Steven Eakins,