November 2009

Editor’s Note: This study provides an in-depth analysis of the literature on instructional presence and critical thinking in the context of distance learning. It compares models of inquiry and reports a simple study of the role of teaching presence in fostering critical thinking in an online conference.

Empirical Study of Teaching Presence and Critical Thinking in Asynchronous Discussion Forums

Deepak Prasad

Fiji Islands

Abstract

The discussion forum is a significant component of online courses. Instructors and students rely on these asynchronous forums to engage one another in ways that potentially promote critical thinking.

This research investigates the relationship between critical thinking and teaching presence in an asynchronous discussion environment using a quasi-experimental, pre-test/post-test design. The results demonstrate that when teaching presence was increased in the discussion forum, there was a significant increase in learners’ level of critical thinking.

Keywords: Critical thinking, teaching presence, asynchronous discussion forum, online learning, Moodle, content analysis, validity, community of inquiry, quasi-experiment

Introduction

Today critical thinking is recognized as one of the main goals in education (Schafersman, 1991; Garrison, Anderson, & Archer, 2000; MacKnight, 2000; Moore, 2004; Perkins & Murphy, 2006; Arend, 2009), yet many educators are unsure what it means and how to develop it in academic settings. For example, educators often proclaim that they want their learners to use critical thinking skills, but instead focus learner efforts on rote learning (McKeachie, Pintrich, Lin, & Smith, 1986; Duron, Limbach, & Waugh, 2006). Critical thinking is often treated as an isolated goal unrelated to the other important goals of higher education; rather, it is an influential goal which, done well, simultaneously improves learners’ thinking skills and thus better prepares them to succeed in the world (Ennis, 1992). In this digital age, with the number of online courses increasing every day, how to teach learners how to think, rather than what to think, is one of the most widely debated topics in the educational community. The issue matters because online courses in higher education are no different from on-campus courses in their goal of improving students’ critical thinking.

Like on-campus learning, online learning has shifted from a teacher-directed, static-content environment to a learner-centered, constructivist one (Lock & Redmond, 2006). Online learning is learning and teaching by means of advanced learning technology, and most online learning situations use a combination of technologies. Moodle, for example, can provide discussion boards, wikis, and real-time text chat. A discussion board is an asynchronous, text-based, computer-mediated communication tool. Unlike in on-campus learning, teachers and students in online learning seldom meet face-to-face, and facilitating interactive discussion is often challenging. The discussion board is a promising tool for coping with this problem. Asynchronous discussion forums provide teachers and learners with the most opportunities to engage one another in ways that can potentially promote critical thinking in an online course (Kanuka & Anderson, 1998; Jonassen, 1994; Buraphadeja & Dawson, 2008; Arend, 2009). However, Murphy (2004, p. 295) argued that “although asynchronous conferencing might afford or support opportunities for engagement in various cognitive processes such as critical thinking, it does not guarantee it”. As with face-to-face discussions, untested teaching methods and techniques in online discussions can create uncertainty about which practices best improve critical thinking (Arend, 2009).

Understanding the constructivist model of learning provides insight into how to promote critical thinking in an online course. The basic premise of constructivism is that learners “construct their own understandings through experience, maturation, and interaction with the environment, especially active interaction with other learners and the instructor” (Rovai, 2007, p.78). In addition, “social constructivism reminds us that learning is essentially a social activity, that meaning is constructed through communication, collaborative activity, and interactions with others” (Swan, 2005, p. 5). Supporters of constructivist theory often argue that online asynchronous discussions support learners in cognitive processes such as critical thinking through engagement with other learners and the instructor. However, Murphy (2004) cautioned that engagement does not follow simply from the use of a communication medium; instead, it is a consequence of three factors: (1) the instructional design of the online asynchronous discussion, (2) the standards set by the discussion moderator, and (3) the character of the interactions between discussants, as well as the issue or topic under consideration. A number of researchers have analyzed discussion forum transcripts to investigate cognitive processes such as problem solving (Murphy, 2004), knowledge construction (Kanuka & Anderson, 1998), and critical thinking (Garrison, Anderson & Archer, 2001; Meyer, 2003; Goodell & Yusko, 2005).

Generally, efforts to measure critical thinking in online discussions have produced mediocre results. Maurino’s (2007) review of 37 studies of critical thinking in online threaded discussion found low levels of critical thinking in the messages analyzed. Maurino recommended more research from the instructor’s viewpoint: much of the research points to the need for greater instructor involvement, yet most studies have focused on students rather than instructors. This points to the need to develop and investigate models and frameworks that capture the complex nature of learning and teaching in the online environment. One widely researched model based on constructivist principles is the Community of Inquiry framework developed by Garrison, Anderson, and Archer (2000), which comprises three overlapping elements: social presence, cognitive presence, and teaching presence. Reviews of research within this framework have drawn conclusions similar to Maurino’s (2007). For example, in their review of research within the Community of Inquiry framework, Garrison and Arbaugh (2007) noted that, while learning does occur in online discussion, few studies show evidence that it moves to the higher levels of critical thinking. Swan, Shea, Richardson, Ice, Garrison, Cleveland-Innes, and Arbaugh (2008), as well as Garrison (2007), suggested that this may have something to do with teaching presence. In the Community of Inquiry framework, teaching presence has three components: (1) instructional design and organization, (2) facilitating discourse, and (3) direct instruction. These components align closely with the three factors that, according to Murphy (2004), give rise to engagement in asynchronous discussion forums. This suggests that teaching presence can stimulate engagement in asynchronous discussion forums. According to constructivist theory, meaning (critical thinking) is constructed through communication (engagement); since teaching presence supports engagement, it follows that teaching presence may support critical thinking. Yet the question “what is the effect of teaching presence on learners’ level of critical thinking?” remains largely unanswered, because to date there has been no empirical study to test it.

Therefore, this study hypothesizes that, if critical thinking is related to teaching presence, then exposing learners participating in asynchronous discussion forums to high teaching presence will result in a higher level of critical thinking. This paper also explores learners’ perceptions of the asynchronous discussion forum.

The remainder of this paper is arranged in five sections. The first section reviews the literature. The second discusses the theoretical framework of this study. The third describes the background of the study, the methods used to design the experiment, and the validity of the content analysis. The paper concludes by discussing the findings and their implications for those researching online learning, with particular emphasis on future directions for increasing teaching presence and critical thinking in asynchronous discussion forums.

Literature Review

The importance of critical thinking in education

Critical thinking is generally recognized as an important skill and a primary goal of education (Schafersman, 1991; Garrison, Anderson, & Archer, 2000; MacKnight, 2000; Moore, 2004; Perkins & Murphy, 2006; Arend, 2009). Educators’ concern with critical thinking is not new; over the last 30 years, education pioneers have focused on critical thinking skills more than ever, on the premise that in the information age knowing how to think is more important than knowing what to think (Özmen, 2008). The value placed on critical thinking was evident in a 1972 study of 40,000 faculty members, in which 97% of the respondents indicated that the most important goal of education is to foster students’ critical thinking ability (Paul, 2004). With the growing acceptance of its importance, educational institutions have added the requirement to encourage critical thinking to their graduate profiles (Corich, 2009).

Critical thinking remains highly valued in all fields of study. For instance, it is required in the workplace, it can help people deal with mental and spiritual questions, and it can be used to evaluate people, policies, and institutions, thereby avoiding social problems (Duron, Limbach, & Waugh, 2006). Similarly, Özmen (2008) states that “critical thinking in educational settings is crucial for establishing infrastructure of democratic societies, and of a new generation whose life is based on scientific thinking in lieu of medieval remains of thinking and living habits” (p. 119). Educators, then, broadly agree that critical thinking is an important goal of education.

Cultivating critical thinking through asynchronous discussion forums

Given the importance of critical thinking in education, how can online discussion forums be used to facilitate it? Despite the ever-increasing popularity and potential value of online asynchronous discussions, there is still no clear indication of how they can be used to promote critical thinking among students (Perkins & Murphy, 2006; Bullen, 1998). According to Lewinson (2005), the extent to which and the ways in which asynchronous discussion forums are used in online courses vary widely. For example, a forum may be the primary component of an online course, serving as a virtual classroom, or only a supplementary resource alongside other learning technologies. Moreover, in either case the forum may be highly structured, with instructors providing specific questions for learners to address, or highly unstructured, serving as a space for community building among learners (Lewinson, 2005).

Online asynchronous discussion is argued to have many benefits for student learning, such as helping learners negotiate higher levels of understanding and share and develop alternative viewpoints in a flexible environment (Rovai, 2000; Berge, 2002; Garrison, 1993). It is learner-centered and therefore not dominated by instructor contributions; some studies show that instructor contributions make up only 10% to 15% of most online discussions (McLoughlin & Mynard, 2009). In addition, participating in online asynchronous discussion is convenient because it is neither time- nor place-dependent (Hew & Cheung, 2003) and does not require all participants to be online simultaneously. Asynchronous communication allows learners some control while increasing ‘wait-time’ and general opportunities for reflective learning and processing of information (Hara, Bonk, & Angeli, 2000). Moreover, asynchronous online discussion can be considered a means to enhance student control over learning and make the educational experience more democratic (Harasim, 1989). Many instructors report that online discussions benefit shy or non-native students by giving them an opportunity to read and develop their remarks and to think critically (Bhattacharaya, 1999; Chickering & Ehrmann, 1996; Warschauer, 1996). Finally, discussion boards store a permanent record of interaction that is easy to archive, search, and evaluate (Meyer, 2004; Cheong & Cheung, 2008).

Despite the benefits of online asynchronous discussion, some potential obstacles may hinder its effective use. A discussion forum is often an add-on after content has been transmitted to learners, and initially it is quite challenging to motivate students to participate, largely because of the unstructured nature of the process (Grandon, 2006). According to Grandon, active participation occurs only when the discussion task is graded. Rovai (2007) concurs that grading strategies increase students’ extrinsic motivation to interact, resulting in a significant increase in the number of student messages per week in courses in which discussions were graded. By contrast, Knowlton (2005) argued that graded discussion discourages students from participating actively and reflecting freely on others’ contributions; instead, students participate only to meet the minimum standards. Along the same line of reasoning, Ou, Ledoux, and Crooks (2004) insisted that “if the instructor is totally absent from the discussions, learners might feel abandoned and be satisfied with providing superficial responses to the task as assigned in order to get a grade” (p. 2989). Some students also resist graded discussions because they believe grading impinges on “free and open participation in the discussion” (Warren, 2008, p.3). Moreover, poor online discussion may result from poorly designed discussion topics and from infrequent, non-existent, irrelevant, or negative instructor feedback (Whittle, Morgan & Maltby, 2000). Another potential weakness is the loss of the social cues present in face-to-face interactions, whose energy and closeness matter to some instructors and learners (Meyer, 2003). In contrast, Chen and Chiu (2008) argued that words and symbols can be used in online discussions to express social cues. According to Chen and Chiu (2008), words and symbols can convey

positive feelings (‘‘I’m feeling great . . .’’, jokes, symbolic icons like ‘‘:)’’ or ‘‘-)’’ [emoticons]), compliments (‘‘You are so smart!’’; Hara et al., 2000), thanks (‘‘Thanks for your answer!’’), and so on. Or, they can express negative feelings such as anger (‘‘My solution is not wrong!!!!!’’), regret (‘‘I should have learned it before . . .’’), shyness (=^___^= [blushing]), apologies (‘‘I’m sorry for having given you the wrong answer.’’), and condescension (‘‘your answer is ridiculous.’’) (p. 681).

Although asynchronous discussion has its weaknesses, Rovai (2007) asserted that skillful facilitation by the instructor can reduce or even eliminate them. Throughout the literature in this area, it is generally claimed that critical thinking can be taught through online discussion (MacKnight, 2000). Thinking is a natural process, but left to itself it is often biased, distorted, partial, uninformed, and potentially prejudiced; excellence in thought must be cultivated (Scriven & Paul, 2007). Schafersman (1991) indicated that learners are not born with the ability to think critically, nor do they naturally develop it beyond survival-level thinking. Schafersman argued that peers and most parents cannot reliably teach critical thinking, and concluded that trained and knowledgeable instructors are essential for teaching it.

By contrast, other researchers (Li, 2003; Mazzolini & Maddison, 2003) have reported that peer messages are more effective than instructor messages at motivating discussion and that instructor presence can in fact shut down dialogue. Similarly, Gagne, Yekovich, and Yekovich (1997) claimed that peer influence has a greater impact on learning than instructor influence. On the other hand, Kay (2006) argued that instructor presence is required to correct misconceptions that arise early in the learning process. Likewise, it should never be presumed that students know how to participate effectively in discussion forums (Ellis & Calvo, 2006). Amid such claims, MacKnight (2000) explained that it should not be assumed that all learners come with the skills needed to advance in an online discussion, nor should it be assumed that instructors have sufficient skill and practice in monitoring discussions or in creating productive communities of online learners; both may need training and support. Arend (2009) reported that learners’ critical thinking appears strongest when more consistent emphasis is placed on the discussion forum and when instructor facilitation is less frequent but more purposeful. From the foregoing discussion, one can hypothesize that a proficient instructor can cultivate critical thinking within asynchronous discussion forums through instructional design and organization, facilitating discourse, and direct instruction. Given this, and the importance of critical thinking in education, it is vital to have a clear understanding of what critical thinking is.

Critical thinking: what is it?

What is critical thinking? That is itself a critical question. Most scholars say that critical thinking is “good thinking”, but the concept has been highly debated in the education community in recent years, and the literature offers various definitions.

The concept of critical thinking can be traced back to the beginning of the twentieth century. John Dewey’s (1933) theory of practical inquiry included three situations: pre-reflection, reflection, and post-reflection. He defined reflective thinking as “active, persistent, and careful consideration of any belief or supposed form of knowledge in the light of the grounds that support it and the further conclusions to which it tends” (1933, p. 9). Dewey believed that education must engage with and enlarge experience and that an educator’s role was to encourage students to think and reflect. Another major historical source of critical thinking in the mid-twentieth century was Benjamin Bloom’s (1956) cognitive taxonomy of educational objectives. The upper end of Bloom’s scale (analysis, synthesis, and evaluation) is often equated with critical thinking (Kennedy, Fisher, & Ennis, 1991; Gokhale, 1995).

Facione (1984, p. 260) claimed that “critical thinking is an active process involving constructing arguments, not just evaluating them”. He refers to a set of preliminary skills that can enable students to construct arguments:

Identifying issues requiring the application of thinking skills informed by background knowledge;

Determining the nature of the background knowledge that is relevant to deciding issues involved and gathering that knowledge;

Generating initially plausible hypotheses regarding the issues;

Developing procedures to test these hypotheses, which procedures lead to the confirmation or disconfirmation of those hypotheses;

Articulating an argument from the results of these testing procedures; and

Evaluating arguments and, where appropriate, understandings developed during the testing process. (p. 261)

According to this definition, critical thinking is a process of building arguments for problem solving. Similarly, Scriven and Paul (2007, Defining Critical Thinking, ¶1) defined critical thinking as a set of macro-level logical skills: “critical thinking is the intellectually disciplined process of actively and skillfully conceptualizing, applying, analyzing, synthesizing, and/or evaluating information gathered from, or generated by, observation, experience, reflection, reasoning, or communication, as a guide to belief and action.”

However, not all educators agree with macro-level definitions of critical thinking. Some educators prefer to take a more micro-level approach. For example, Beyer (1985, p. 303) argues that critical thinking is not a process “at least not in the sense that problem-solving or decision-making are processes; critical thinking is not a unified operation consisting of a number of operations through which one proceeds in a sequence”. Beyer and others (Rudin, 1984; Fritz & Weaver, 1986) believed that critical thinking is a set of discrete skills. According to this explanation, students will have to choose and apply discrete skills.

Recently, Hanson (2003) took exception to both perspectives, concluding instead that “a critical thinker has to engage not only with micro questions within the text, both at the superficial and the deep readings, but also with macro-issues surrounding topics” (p. 203). She stated that the “core of critical thinking is the constant considered identification and challenging of the accepted”. Hanson posited that critical thinking:

involves the evaluation of values and beliefs as well as competing truth explanations and of course texts; it involves both rationality/objectivity and emotions/subjectivity; it involves the questioning of the very categories of thought that are accepted as proper ways of proceeding and to ensure that one always:

Searches for hidden assumptions;

Justifies assumptions;

Judges the rationality of those assumptions; and

Tests the accuracy of those assumptions. (p.203)

As mentioned above, there are many definitions of critical thinking, and choosing a single one, even from the few listed above, is difficult. Some, such as Hanson (2003), focus on both macro- and micro-level logical skills. Others, such as Facione (1984) and Scriven and Paul (2007), focus on macro-level logical skills. It seems unreasonable to expect a single definition to encompass all the competences that critical thinkers might display. Tice (1999) pointed out that perhaps we struggle to settle on a single definition of critical thinking because it cannot be narrowly contained. She asserted that the definition of critical thinking varies according to context, and that this does not indicate that we have been inconsistent in defining it. Lastly, Tice concluded that a core element of critical thinking is that it varies by context, and that the ability to tolerate ambiguity and to distinguish among shades of gray should be accepted as an important characteristic of critical thinking.

The multiplicity of definitions is one of the problems plaguing research in this area, since most educators have spent more energy on defining critical thinking than on finding ways to improve it. This is why this paper examines the relationship between teaching presence and critical thinking within asynchronous discussion forums. Such an investigation requires not a definition of critical thinking but a model that focuses on supporting critical thinking in a fully online learning environment. As mentioned in the introduction, the Community of Inquiry is one such model. This theoretical framework has grown in prominence and has been used in hundreds of studies over the last decade (Swan et al., 2008). Therefore, this study was built on the Community of Inquiry theoretical framework, which the following section describes.

Theoretical framework

Community of Inquiry framework

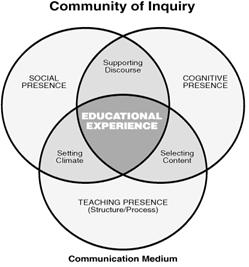

Garrison et al. (2000, p. 89) asserted that critical thinking is “a process and outcome that is frequently presented as the ostensible goal of all higher education” and developed a Community of Inquiry model (see Figure 1) to guide computer-mediated communication in support of critical thinking. They argued that deep and meaningful online learning occurs through the interaction of three core elements: social presence, cognitive presence, and teaching presence. The framework has its genesis in the work of John Dewey and is consistent with constructivist approaches to learning in higher education. From a theoretical perspective, there is substantial evidence supporting the validity of the Community of Inquiry framework (Garrison & Arbaugh, 2007; Swan et al., 2008; Shea & Bidjerano, 2009). Given the number of studies that have used it as a guide, the Community of Inquiry (CoI) framework was selected as the theoretical framework of this study.

Figure 1. Community of Inquiry Framework

Adapted from Garrison, Anderson, and Archer (2000).

Within the CoI framework, cognitive processes such as critical thinking take place as part of cognitive presence. Garrison et al. (2000) emphasized that cognitive presence is the most significant element in critical thinking. They cited teaching presence as most central to their framework, since “appropriate cognitive and social presence, and ultimately, the establishment of a critical community of inquiry, is dependent upon the presence of a teacher” (Garrison et al., 2000, p. 96). Garrison et al. (2000) explained that teaching presence can be performed by either the teacher or the learners in a Community of Inquiry, but pointed out that in an educational environment it is primarily the teacher’s responsibility. Therefore, this study treats teaching presence as the primary responsibility of the teacher. Garrison et al. (2000) also hypothesized that a lack of teacher presence may result in a decrease in cognitive processes. This suggests that critical thinking, which occurs within cognitive presence, depends on teaching presence. The sections that follow describe each of these two elements and examine the categories and indicators used to assess teaching presence and critical thinking and to guide the coding of discussion forum messages. Coding is essentially a process of selective reduction, the central idea in content analysis: by breaking the content of materials down into meaningful and pertinent units of information, certain characteristics of the messages can be analyzed and interpreted.

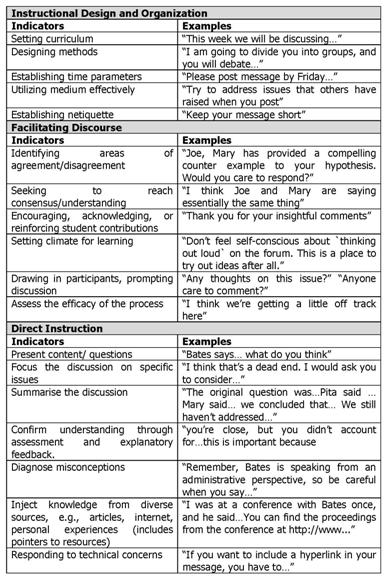

Assessing teaching presence

In the Community of Inquiry framework, teaching presence is defined as “the design, facilitation, and direction of cognitive and social processes for the purpose of realizing personally meaningful and educationally worthwhile learning outcomes” (Anderson, Rourke, Garrison, & Archer, 2001, p. 5). Anderson et al. (2001) conceptualized teaching presence as having three categories: (1) instructional design and organization, where instructors and/or course designers develop curriculum, activities, assignments, and course schedules; (2) facilitating discourse, where instructors set the climate for learning by encouraging and drawing students into online discussion; and (3) direct instruction, where instructors present content and focus and direct online discourse. They developed a template, with 3 categories and 18 indicators, for guiding the coding of computer conference transcripts to assess teaching presence (see Table 1). Each indicator is accompanied by a sample sentence showing characteristic key words or phrases.

Table 1

Teaching Presence coding template

Adapted from Anderson et al. (2001).

Validation of the three categories of teaching presence has often turned on how, in practice, to distinguish facilitation from direct instruction. Shea, Fredericksen, Picket and Pelz (2003), in their study of teaching presence and online learning, concluded that two categories are more interpretable in practice. They labeled these categories design and directed facilitation, conceptualizing directed facilitation as a combination of facilitation and direct instruction. However, Arbaugh and Hwang’s (2006) study validated the three categories of teaching presence. In a recent review of the Community of Inquiry model, Garrison (2007) addressed the differences between these two studies’ validations of the teaching presence construct, explaining that they may be due to the nature of the analysis. Garrison concluded that all three categories of teaching presence are distinct but highly correlated, and that students may not be able to distinguish between facilitation and direct instruction. Thus, the coding template of Anderson et al. (2001) was chosen for this study. Another reason for selecting this template is that it is one of the few computer-mediated communication coding schemes for measuring teaching presence that has an existing research base.

Assessing critical thinking

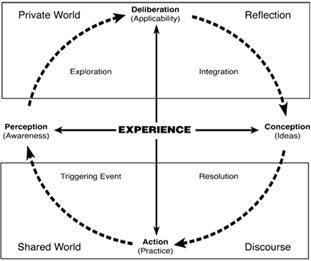

Garrison et al. (2000) described cognitive presence as the extent to which learners are able to construct and confirm meaning through sustained reflection and discourse in a critical community of inquiry. They asserted that critical thinking takes place within cognitive presence and is operationalized through the Practical Inquiry model (see Figure 2), which is rooted in Dewey’s (1933) foundational ideas of practical inquiry.

Figure 2. Practical Inquiry Model

Adapted from Garrison, Anderson, and Archer (2000).

In the Practical Inquiry model, the process of critical thinking is defined as cognitive activity geared to four consecutive phases: (1) triggering event, where some issue or problem is identified for further inquiry; (2) exploration, where students explore the issue, both individually and corporately through critical reflection and discourse; (3) integration, where learners construct meaning from the ideas developed during exploration; and (4) resolution, where learners apply the newly gained knowledge to educational contexts or workplace settings (Garrison et al., 2001).

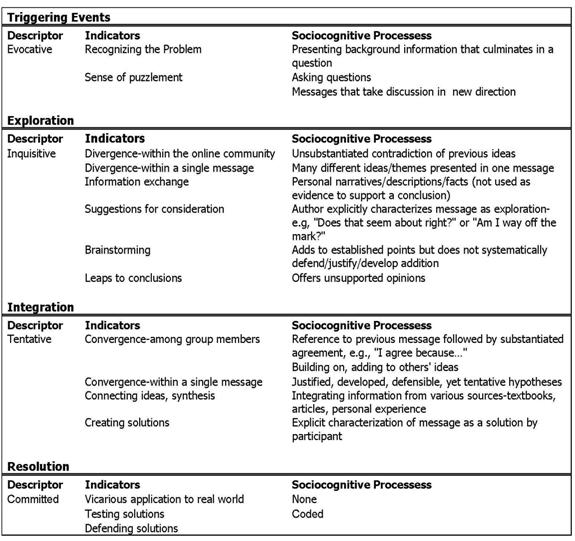

Garrison et al. (2001) developed a template for guiding the coding of computer conference transcripts to assess the critical thinking process. They included descriptors, indicators, and sociocognitive processes in the template to give coders sufficient guidance for reliable categorization. The guidelines for each category are presented in Table 2.

Table 2

Cognitive Presence coding template

Adapted from Garrison et al. (2001).

The next section gives an overview of content analysis methodology and discusses validity issues in content analysis.

Content Analysis and Validity

Content analysis is a systematic, replicable technique for compressing many words of text into fewer content categories based on explicit rules of coding (Krippendorff, 1980). Researchers have used content analysis to analyze transcripts of asynchronous, text-based computer conferencing in educational settings (Rourke, Anderson, Garrison, & Archer, 2001, p. 2). Content analysis may be objective, examining manifest content, when it involves counting the number of times a particular word or set of words is used; or it may be subjective, examining latent content, when it depends on the rater’s interpretation of the meaning of what has been written (Meyer, 2004). However, not all research questions can be answered by focusing on manifest content, especially those that assess computer-mediated communication for higher-order learning outcomes such as critical thinking (Rourke et al., 2001).
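To make the manifest/latent distinction concrete, the following Python sketch performs a purely manifest analysis: it counts how often chosen words or phrases occur across a set of discussion messages. The function names, the simple tokenizing rule, and the sample messages are hypothetical illustrations, not part of this study’s procedure. Note that nothing in such a count captures what a message means, which is why latent coding of critical thinking relies on trained human coders instead.

```python
import re
from collections import Counter


def tokenize(text):
    # Simplifying assumption: lower-case the text and treat runs of letters
    # and apostrophes as words, ignoring punctuation.
    return re.findall(r"[a-z']+", text.lower())


def manifest_counts(messages, phrases):
    """Count occurrences of each word or multi-word phrase across all messages."""
    counts = Counter({phrase: 0 for phrase in phrases})
    for message in messages:
        tokens = tokenize(message)
        for phrase in phrases:
            target = tokenize(phrase)
            if not target:
                continue  # skip empty search terms
            width = len(target)
            # Slide a window the length of the phrase over the message's tokens
            for i in range(len(tokens) - width + 1):
                if tokens[i:i + width] == target:
                    counts[phrase] += 1
    return counts


# Hypothetical forum messages, for illustration only
messages = [
    "I agree, but I wonder why the results differ.",
    "Good point. I wonder if the sample was too small?",
    "I agree with you.",
]
print(manifest_counts(messages, ["i agree", "i wonder"]))
```

Each phrase is counted twice here, yet the counts cannot tell whether “I wonder” opens a genuine triggering question or is merely conversational, which is exactly the limitation Rourke et al. (2001) point to.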

Moreover, Berelson (1952) characterizes content analysis as primarily a descriptive technique. Nonetheless, researchers often want to extend content analysis from description to inferential hypothesis testing. Several researchers have used inferential content analysis and “were able to draw convincing conclusions concerning different experimental or quasi-experimental conditions” (Rourke et al., 2001, p. 6). Furthermore, unitizing is the process in content analysis that identifies the segments of the transcripts that will be recorded, categorized, and considered. Rourke, Anderson, Garrison, and Archer (1999) identified five units of analysis that have been used in computer-mediated communication research: sentence units, proposition units, paragraph units, thematic units, and message units. They further stated that the message unit is the most practical, although researchers most commonly use thematic units. Similarly, Rourke et al. (2001) identified two important advantages of message units: first, they are objectively identifiable, and second, they produce a manageable set of cases.

The validity of content analysis depends largely on the inter-rater reliability, “defined as the extent to which different coders, each coding the same content comes to the same coding decision” (Rourke et al., 2001, p. 4). There are a number of statistical methods that can be used to determine inter-rater reliability. However, Rourke et al. (2001) recommended Cohen's kappa (k) statistic to determine reliability. They explained that Cohen's kappa (k) is a chance-corrected measure of inter-rater reliability that assumes two raters, n cases, and m mutually exclusive and exhaustive nominal categories.

The formula for calculating kappa is:

k = (Fo - Fc) / (N - Fc)

Where: N = the total number of judgments made by each coder

Fo = the number of judgments on which the coders agree

Fc = the number of judgments for which agreement is expected by chance.
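The formula can be computed directly from two coders’ category assignments. The following is a minimal Python sketch, assuming each coder assigns exactly one nominal category to each message (the mutually exclusive, exhaustive case described above); the function name and the codes in the example are hypothetical, not data from this study. Fc is estimated in the usual way from each coder’s category totals.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders, computed as k = (Fo - Fc) / (N - Fc)."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must code the same non-empty set of units")
    n = len(coder_a)  # N: total number of judgments made by each coder
    # Fo: number of units on which the two coders assigned the same category
    f_o = sum(a == b for a, b in zip(coder_a, coder_b))
    # Fc: agreements expected by chance, from each coder's category totals
    totals_a, totals_b = Counter(coder_a), Counter(coder_b)
    f_c = sum(totals_a[c] * totals_b[c] for c in totals_a) / n
    if f_c == n:
        raise ValueError("kappa is undefined when chance agreement equals N")
    return (f_o - f_c) / (n - f_c)


# Hypothetical codes for ten messages (T = triggering event, E = exploration,
# I = integration, R = resolution); these are not the study's data.
coder_a = ["T", "T", "E", "E", "E", "I", "I", "R", "T", "E"]
coder_b = ["T", "T", "E", "E", "I", "I", "I", "R", "T", "T"]
kappa = cohens_kappa(coder_a, coder_b)
print(f"k = {kappa:.2f}")
```

Here Fo = 8 and Fc = (3×4 + 4×2 + 2×3 + 1×1)/10 = 2.7, so k = 5.3/7.3 ≈ 0.73. Because this study allowed a single message to show several categories, one option (an assumption, not necessarily the procedure used here) is to run the calculation once per category with “present”/“absent” codes.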

The exact level of inter-rater reliability that must be achieved has not been clearly established. However, for Cohen’s kappa, Capozzoli, McSweeney, and Sinha (1999, p. 6) stated that:

values greater than 0.75 or so may be taken to represent excellent agreement beyond chance, values below 0.40 or so may be taken to represent poor agreement beyond chance, and values between 0.40 and 0.75 may be taken to represent fair to good agreement beyond chance.
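These bands can be expressed as a small lookup. The Python sketch below is illustrative only: the function name is hypothetical, and because the quoted bands are approximate (“or so”), treating 0.40 and 0.75 as hard cut-offs, with the boundary values themselves falling in the middle band, is a simplifying assumption.

```python
def interpret_kappa(kappa):
    """Map a kappa value onto the approximate bands of Capozzoli et al. (1999)."""
    # The published bands are approximate ("or so"); hard cut-offs are a simplification.
    if kappa > 0.75:
        return "excellent agreement beyond chance"
    if kappa < 0.40:
        return "poor agreement beyond chance"
    return "fair to good agreement beyond chance"


# Kappa values reported in the Findings section of this study.
for k in (0.79, 0.82, 0.81, 0.80):
    print(f"k = {k:.2f}: {interpret_kappa(k)}")
```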

In this study, Cohen's kappa (k) was used to test the inter-rater reliability of the content analysis. In addition, focusing on latent content and taking the message as the unit of analysis, this study investigated the effect of high teaching presence on critical thinking.

Background information

This study was conducted at the University of the South Pacific (USP), a regional university that serves twelve Pacific island nations (Cook Is., Fiji Is., Kiribati, Marshall Is., Nauru, Niue, Solomon Is., Tokelau, Tonga, Tuvalu, Vanuatu and Samoa). For the last thirty-eight years, USP has offered courses and programmes through Distance and Flexible Learning (DFL) in a variety of modes and technologies. Currently, a Bachelor of Law programme is offered in online DFL mode through the Moodle (Modular Object-Oriented Dynamic Learning Environment) learning management system. Moodle communication tools such as chat, email, and the discussion board are used for communication and interaction in many courses, at the course designer’s discretion.

So far, the tool that offers the most promise for within-course communication and interaction is the asynchronous discussion board. This is because the twelve regional island nations that USP serves have varied time zones, infrastructure, and technology, which affect the use of the Moodle chat feature. Hence, chat is often ruled out as an essential communication component because it not only demands synchronous communication but also draws heavily on the available bandwidth. Moodle email has no obvious advantages because it only allows students to email others within the same course. Besides, all USP students have an email account that they use for personal communication.

Asynchronous discussion forums are very popular in Law courses because they are perceived as a platform for students to engage in debate with one another and to develop their argumentation and thinking skills. In short, the forum is perceived as a very promising tool for promoting critical thinking among students participating in online discussions.

For these reasons, an undergraduate online Law course was selected for this research. The course was 14 weeks long, with 94 registered students, and 10% of the total assessment was based on students’ online postings. At the beginning of each week, the course instructor posted a discussion question, and students were required to participate in the discussion. The course instructor had over 2 years of online teaching experience and over 5 years of face-to-face teaching experience.

Research design and methods

Design

A quasi-experimental, one-group pre-test–post-test design was used for this study. Quasi-experimental methods are categorized as quantitative research rooted in positivism, which emphasizes facts, relationships, and causes concerning educational phenomena (Wiersma & Jurs, 2005). In the quasi-experimental method, the researcher deliberately manipulates or varies at least one variable to determine the effects of that variation (Wiersma & Jurs, 2005). In this study, teaching presence, the independent variable, was increased to determine its effect on students’ level of critical thinking.

Sampling

A stratified sampling technique was used. All members of the population were first grouped according to a single characteristic: whether they had daily access to the internet. Internet availability was considered vital in this study because it eliminates the extraneous variable of participants being unable to take part in the online discussions due to lack of internet access. Members with daily internet access were then placed into two subgroups, males and females, and 15 participants were selected from each subgroup using simple random sampling. A total of 30 students participated in this study.
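The screening and stratification steps just described can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the function name, the record fields `gender` and `daily_internet`, and the roster values are hypothetical; only the course size of 94 students and the 15 participants per subgroup come from the text.

```python
import random


def stratified_sample(population, per_stratum=15, seed=None):
    """Keep members with daily internet access, stratify by gender, and draw a
    simple random sample of `per_stratum` members from each stratum."""
    rng = random.Random(seed)
    eligible = [p for p in population if p["daily_internet"]]
    sample = []
    for gender in ("male", "female"):
        stratum = [p for p in eligible if p["gender"] == gender]
        # rng.sample raises ValueError if a stratum has fewer than per_stratum members.
        sample.extend(rng.sample(stratum, per_stratum))
    return sample


# Hypothetical roster of the course's 94 registered students; the attribute
# values are made up for illustration and are not the study's data.
roster = [
    {"id": i, "gender": "male" if i % 2 else "female", "daily_internet": i % 3 != 0}
    for i in range(94)
]
participants = stratified_sample(roster, seed=2009)
print(len(participants))
```

Using a seeded `random.Random` instance keeps the draw reproducible, which is one way to document a random selection for later audit.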

Procedure

An undergraduate 14-week online Law course was used to conduct this research. In this course, 10% of the total assessment was based on students’ online postings. At the beginning of each week, the course instructor posted a question in the discussion forum, which was open for 7 days. This study was conducted from week 2 to week 6 of the course. At the beginning of week 2, a survey questionnaire was created on the Moodle course page. At the end of week 2, the survey responses were analyzed and the research participants were selected using the stratified sampling technique described above. The pre-test period was week 3, during which the instructor participated in the forum in a normal manner. At the end of week 3, the instructor’s and participants’ discussion postings were retrieved from the automatically generated records of the Moodle discussion board, and two coders analyzed the transcripts to assess teaching presence and critical thinking. Once the pre-test teaching presence and the students’ level of critical thinking had been analyzed, the course instructor discussed ways to improve and increase her presence in the discussion forums, using the teaching presence coding template as a guide. During week 6, teaching presence was increased through more encouragement and pedagogical comments and through the provision of reinforcement and expert advice. After this intervention, the instructor’s and participants’ discussion postings for week 6 were retrieved and analyzed for teaching presence and level of critical thinking.

The secondary question, learners’ perception of the asynchronous discussion forum, was explored through an open-ended questionnaire created on the Moodle course page. The results and sample responses are discussed below.

Data Analysis

Teaching presence and critical thinking were analyzed using content analysis. Both variables were analyzed in three essential steps, as outlined below.

First, the postings of the instructor and the 30 participants were compiled into text files: one text file for the instructor and one text file for each of the 30 participants.

Second, two coders were trained to use the coding schemes (see Table 1 and Table 2) adapted for identifying and categorizing the teaching presence and critical thinking variables. Message unit analysis was used in this research because it is the most practical, is less time-consuming, and facilitates unit reliability (Anderson et al., 2001). Each message was coded as either illustrating or not illustrating one or more indicators of the categories of teaching presence and critical thinking. In other words, rather than assigning each message that demonstrated some sort of teaching presence to one and only one category of teaching presence, I allowed for the possibility that a single message might exhibit characteristics of more than one category.
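Because a single message may exhibit more than one category, each coded message can be represented as a set of categories and tallied per category. The Python sketch below is a hypothetical illustration of that bookkeeping (the four coded messages and the `tally` helper are invented, not the study’s data or tooling); it shows why category frequencies can add up to more than the number of messages, and why the percentages can sum to more than 100%.

```python
from collections import Counter

# Hypothetical coding of four instructor messages; each message is the set of
# teaching presence categories the coders found in it (not data from this study).
coded_messages = [
    {"Direct Instruction"},
    {"Facilitating Discourse", "Direct Instruction"},
    {"Instructional Design"},
    {"Instructional Design", "Facilitating Discourse", "Direct Instruction"},
]


def tally(messages):
    """Count, for each category, the number of messages showing at least one of its indicators."""
    counts = Counter()
    for categories in messages:
        # Each category is counted at most once per message.
        counts.update(set(categories))
    return counts


counts = tally(coded_messages)
total_instances = sum(counts.values())  # may exceed the number of messages
percentages = {c: 100 * f / len(coded_messages) for c, f in counts.items()}
print(counts, total_instances, percentages)
```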

Third, inter-rater reliability was determined using Cohen’s kappa.

Ethical consideration

Participation was entirely voluntary. Information about the study was given to every participant to assure the protection of human rights, and the students had the opportunity to decide whether they were willing to participate. A signed informed consent form was obtained from each student after the intervention was applied; this was done to ensure that students responded neutrally to the intervention. The students were free to refuse to participate or to withdraw from the study at any time without penalty. Confidentiality was ensured through the use of code numbers, and the list of code numbers and associated names was kept separate from the actual data. The data obtained from the participants were used only for the study, and the students were informed that the results would be submitted for publication.

Findings

Participant’s characteristics

A total of 30 subjects, 15 males (50%) and 15 females (50%), participated in this study. A five-item questionnaire was created on the Moodle course page to collect descriptive data on participants. The questionnaire results revealed multiple age groups, spanning 18 to 55-plus years. Participants were also asked to indicate their marital status: 11 (37%) were married and 19 (63%) were single. In terms of registration status, 10 (33.3%) were enrolled full time and the remaining 20 (66.7%) were enrolled part time. Of the 30 participants, 29 (96.7%) had online learning experience, while 1 (3.3%) had no previous experience with online learning. The academic level variable consisted of five categories: High school, Certificate, Diploma, Graduate, and Postgraduate. The distribution by academic level was 64% High school, 13% Certificate, 3% Diploma, 13% Graduate, and 7% Postgraduate.

Pre-test–post-test teaching presence

The instructor posted a total of 89 messages during the pre-test and 154 messages during the intervention period (post-test). Table 3 shows the frequency and percentage of teaching presence categories observed in the instructor’s pre-test and post-test messages. Percentages were calculated by dividing the number of postings showing a given category of teaching presence by the total number of messages posted by the instructor; because a single message could show more than one category, the percentages in each column can sum to more than 100%. Direct instruction was the predominant category, with 57.3% (pre-test) and 78.3% (post-test) of all teacher messages including some form of direct instruction. Instructional design was the least frequently observed category, addressed in 33.7% (pre-test) and 40.3% (post-test) of messages. Total teaching presence was calculated by adding the frequencies of the three teaching presence categories: it was 123 during the pre-test and 328 during the intervention period, so post-test teaching presence was approximately 2.7 times the pre-test level.

Table 3

Pre-test and Post-test: Frequencies and percentages of teaching presence classifications

| Category | Pre-test f | Pre-test % | Post-test f | Post-test % |
|---|---|---|---|---|
| Instructional Design | 30 | 33.7 | 62 | 40.3 |
| Facilitating Discourse | 42 | 47.2 | 118 | 62.4 |
| Direct Instruction | 51 | 57.3 | 148 | 78.3 |
| Total teaching presence | 123 | | 328 | |
| Total instructor messages | 89 | | 154 | |
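The arithmetic behind the pre-test column of Table 3 and the 2.7 ratio can be reproduced from the published frequencies. The Python sketch below is a check under the calculation rule stated above (category frequency divided by total instructor messages, rounded to one decimal); the variable names are illustrative.

```python
# Pre-test frequencies and instructor message count from Table 3.
pre_counts = {
    "Instructional Design": 30,
    "Facilitating Discourse": 42,
    "Direct Instruction": 51,
}
pre_messages = 89
post_total = 328  # total post-test teaching presence from Table 3

# Percentage = messages showing the category / all instructor messages, rounded to 0.1.
pre_pct = {c: round(100 * f / pre_messages, 1) for c, f in pre_counts.items()}
# Total teaching presence = sum of the category frequencies.
pre_total = sum(pre_counts.values())
ratio = post_total / pre_total

print(pre_pct)
print(pre_total, round(ratio, 1))
```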

The inter-rater reliability was k = .79 for the pre-test transcript and k = .82 for the post-test transcript. According to Capozzoli et al. (1999), values greater than 0.75 or so may be taken to represent excellent agreement beyond chance, so both coefficients indicate excellent agreement.

Pre-test–post-test critical thinking

During the pre-test, the 30 participants posted a total of 76 messages, a mean of 2.53 messages per participant. The highest number of messages posted by an individual participant was 4, and the lowest was 1. Further analysis revealed that 6 participants posted 1 message each, 8 participants posted 2 messages each, 10 participants posted 3 messages each, and 6 participants posted 4 messages each.

A total of 132 messages were posted during the post-test, a mean of 4.4 messages per participant, with individual participants posting between 1 and 6 messages. At the individual level, 1 participant posted 1 message, 3 participants posted 2 messages each, 5 participants posted 3 messages each, 2 participants posted 4 messages each, 12 participants posted 5 messages each, and 7 participants posted 6 messages each.
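The totals and means in the two preceding paragraphs follow from the per-participant distributions. The Python check below recomputes them; the `summarize` helper is hypothetical, and the distributions are taken directly from the text.

```python
def summarize(distribution):
    """distribution maps messages-per-participant to the number of participants."""
    participants = sum(distribution.values())
    messages = sum(k * n for k, n in distribution.items())
    return participants, messages, messages / participants


# Message distributions reported for the pre-test and post-test.
pre = {1: 6, 2: 8, 3: 10, 4: 6}
post = {1: 1, 2: 3, 3: 5, 4: 2, 5: 12, 6: 7}

for label, dist in (("pre-test", pre), ("post-test", post)):
    n, total, mean = summarize(dist)
    print(f"{label}: {n} participants, {total} messages, mean {mean:.2f}")
```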

Table 4 shows the frequency and percentage of critical thinking classifications observed in the pre-test and post-test messages posted by the 30 participants. Percentages were calculated by dividing the number of postings showing a given classification of critical thinking by the total number of messages posted by the participants. Triggering events was the predominant category, appearing in 36.8% (pre-test) and 56.1% (post-test) of all participant messages. Resolution was the least frequently observed category, addressed in 6.6% (pre-test) and 25.6% (post-test) of messages. Overall, there was a significant increase in the post-test levels of critical thinking.

Table 4

Pre-test and Post-test: Frequency and percentage of critical thinking classifications

| Category | Pre-test f | Pre-test % | Post-test f | Post-test % |
|---|---|---|---|---|
| Triggering Events | 28 | 36.8 | 74 | 56.1 |
| Exploration | 23 | 30.1 | 73 | 55.3 |
| Integration | 9 | 11.8 | 41 | 31.1 |
| Resolution | 5 | 6.6 | 34 | 25.6 |
| Total student messages | 76 | | 132 | |

The inter-rater reliability was k = .81 for the pre-test transcript and k = .80 for the post-test transcript. According to Capozzoli et al. (1999), values greater than 0.75 or so may be taken to represent excellent agreement beyond chance, so both coefficients indicate excellent agreement.

Pre-test–post-test teaching presence and critical thinking

Table 5 compares the instructor’s total pre-test and post-test teaching presence with the percentage of each critical thinking classification and the mean of these percentages. The mean was calculated by dividing the sum of the four critical thinking classification percentages by 4 (the number of critical thinking categories). The analysis demonstrates that as teaching presence increased, there were significant increases in all levels of critical thinking. Comparing total teaching presence with the mean critical thinking classification shows that while total teaching presence increased by a factor of about 2.7 from pre-test to post-test, the mean critical thinking classification increased by a factor of about 2 (from 21.3 to 42.0).

Table 5

Pre-test and Post-test: Total teaching presence and percentage of critical thinking classifications

| | Total Teaching Presence | Triggering | Exploration | Integration | Resolution | Mean (%) |
|---|---|---|---|---|---|---|
| Pre-test | 123 | 36.8% | 30.1% | 11.8% | 6.6% | 21.3 |
| Post-test | 328 | 56.1% | 55.3% | 31.1% | 25.6% | 42.0 |
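The mean column and the two growth factors discussed above can be recomputed from the Table 5 figures. The Python sketch below uses a simple unweighted average of the four published percentages, following the description in the text; variable names are illustrative.

```python
# Critical thinking percentages from Table 5, in the order
# triggering, exploration, integration, resolution.
pre_pct = [36.8, 30.1, 11.8, 6.6]
post_pct = [56.1, 55.3, 31.1, 25.6]

pre_mean = sum(pre_pct) / len(pre_pct)     # about 21.3
post_mean = sum(post_pct) / len(post_pct)  # about 42.0

presence_factor = 328 / 123             # total teaching presence, post / pre
thinking_factor = post_mean / pre_mean  # mean critical thinking, post / pre

print(f"means: {pre_mean:.1f} -> {post_mean:.1f}")
print(f"factors: presence {presence_factor:.1f}, critical thinking {thinking_factor:.1f}")
```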

Perception of asynchronous discussion forum

All 30 participants responded to the five-item open-ended questionnaire and gave a variety of interesting comments. It took approximately 3 weeks for all the participants to complete the questionnaire, perhaps because the questions required long answers. Three sample responses are included for each question to illustrate students’ perceptions of the online discussion forum.

What is your motivation for participating in the forums?

The majority of participants indicated that their motivation to participate in the discussion forum was that they wanted to score good marks. This agrees with Rovai’s (2007) findings about using grading strategies to increase students’ extrinsic motivation. Another prominent comment was that participants enjoyed positive arguments with other participants in the course.

Sample 1

I am assessed so it is important that I participate in every topic's discussion. The questions also help me to think critically, thereby improving my ability to think and analyze questions and situations.

Sample 2

Participation, articulating my thoughts and then evaluating them for precision and relevancy against other forum user’s thoughts of particular topics. And the grading of course

Sample 3

Dialogue with others in the course and colleagues regarding questions from coordinator, assignments and study tasks. Forums are useful, especially when there is a heated debate between students.

What can be done to improve your motivation?

The most common response to this question was that participants wanted their discussion forum messages to be graded as soon as the forum closed, rather than receiving their marks at the end of the semester. There was also strong demand for greater participation from the instructor. This suggests that instructors can, by and large, motivate students to participate in discussion forums through their own active participation.

Sample 1

We should be marked weekly and told of our marks so that we know how we have performed. By seeing that other people are scoring better than us, it would motivate us to work harder and contribute more towards the discussion. If we are doing well, it will encourage us to do better each time.

Sample 2

With more participation from the Online Tutor or Coordinator, to guide my answers if they are correct or incorrect. There are many views shared on the discussion forum but we really need guidance as to what is the right answer.

Sample 3

More feedbacks from course coordinators when it is clear that my discussions are not in accordance or in line with the topics so that I can learn from my mistakes

How do you feel about being assessed for your participation in the discussion forum?

All the participants were satisfied that their discussion messages were graded. This contradicts Warren’s (2008) report that some students rebel against graded discussion because they believe that grading impinges on free and open participation. In fact, in this study the majority of participants indicated that because the discussion forum messages are graded, they make sure they provide valid and logical arguments, and in doing so they read and understand their notes. They indicated that most of the time they only open their books to complete their assignments or to prepare for examinations.

Sample 1

I am happy because it is not so hard and we would get marks. It is like doing a mini assignment every week, which is better than doing lengthy and hard assignments or tests.

Sample 2

I feel that I may not get good mark if I do not put logical argument on the topic discuss.

Sample 3

I think it more appropriate for accessing my participation in the discussion forum for me to know, for other students to comment on my views and for me to see from their perspective on the discussion forum.

What do you think about the instructor’s participation in the discussion forum?

The bulk of the participants commented that the instructor’s participation in the discussion forum was at an acceptable level, but a minority felt that more active participation was required. Most participants suggested that instructor presence is important because it clears up misunderstandings and gives them confidence that they are on the right track. This confirms Kay’s (2006) argument that instructor presence is required to correct misconceptions that spring up early in the learning process.

Sample 1

Well done. My tutor contributes and encourages other students to discuss. She starts healthy discussions for us to continue.

Sample 2

Excellent. I look forward to those comments, because I then know that either I am on track or way off the mark.

Sample 3

I think the tutor’s participation is very important for students. Through their guidance, we get to know if we are on the right track or not. The higher the number of contributions by the tutor, the better for the students.

How do you feel when the instructor comments on your forum post?

All 30 participants gave positive comments in response to this question, saying, for example, that they felt happy, motivated, great, special, and proud.

Sample 1

I feel motivated and see that there is more to contribute. Helps me to improve in my discussions.

Sample 2

Personally I am happy because in doing so, the tutor help put me back on track. I learn more this way.

Sample 3

I feel great that I get feedback and know whether I am wrong or right.

Discussion

The results revealed that as teaching presence increased, there were significant increases in all levels of critical thinking (see Table 5). This is consistent with McLoughlin and Luca’s (1999) finding that when an instructor intervened in the discussion, he was able to guide students to a higher level of critical thinking. The coders understood the concepts of the Community of Inquiry and Practical Inquiry models in a very short time (within a week). They found that both the Anderson et al. (2001) teaching presence template and the Garrison et al. (2001) cognitive presence coding template enabled them to categorize the indicators easily. The Practical Inquiry model was used successfully to categorize indicators of critical thinking in this study.

It was also observed that participants posted their messages at night most of the time, which indicates that discussion in online asynchronous forums takes place regardless of time and place. Furthermore, student participation increased dramatically in the post-test: students posted 132 messages, compared with 76 in the pre-test. This contradicts Grandon’s (2006) report that active student participation occurs only when the discussion task is graded.

In this study, both the pre-test and post-test discussion tasks were graded, and the results showed that high student participation can be achieved through sufficient teaching presence.

Another issue is whether the type of discussion question is related to higher levels of critical thinking. In this study, the pre-test and post-test discussion questions were carefully framed so that both had equal potential to stimulate critical thinking. The two questions were:

Pre-test question: Assent of the head of state has no real significance in the legislative process and the enactment process of parliament should be sufficient.

Do you agree? Why/why not?

Post-test question: Parliament should not have the power to delegate its functions to other persons/bodies (subsidiary legislation) as it only encourage corruption and takes the power away from those who are elected to represent the people.

Do you agree? Why/why not?

Prominent researchers in the field of critical thinking were consulted to ensure that both questions could stimulate critical thinking. Professor Terry Anderson, Dr. Martha Cleveland-Innes, Dr. Elizabeth Murphy, and Professor Curtis Bonk confirmed that both were higher-order questions with the potential to stimulate critical thinking. For example, T. Anderson (personal communication, June 10, 2009) said:

“I think your questions are great and likely to stimulate critical thinking. They have elements of interest and conflict - triggering potential. Certainly offer lots of opportunity for exploration in multiple media and contexts. They also stimulate integration as many diverse threads may need to be brought together in the form that allows resolution and application. So I think these are theoretically sound means to develop critical thinking as per Garrisons, Candy's and other models of critical thinking.”

Because both questions had comparable potential to stimulate critical thinking, the difference between the low frequencies observed in all categories of critical thinking during the pre-test and the high frequencies observed in all four categories during the post-test cannot be attributed to the questions themselves. The only difference between the pre-test and post-test was the increase in teaching presence. Judging from this, teaching presence is a major determinant in moving students to higher levels of critical thinking in asynchronous discussion forums.

To summarize, in both the pre-test and the post-test, triggering events and exploration had the highest frequencies. The first phase of the Practical Inquiry model, triggering events, had the highest frequency: 36.8% in the pre-test and 56.1% in the post-test. This seems reasonable, considering that the discussion questions were well framed and had elements of interest and conflict. The second phase, exploration, had the second highest frequency: 30.1% in the pre-test and 55.3% in the post-test. This is also not surprising, as the discussion questions offered many opportunities for exploration in multiple media and contexts, and it is consistent with previous research. However, the frequency of responses dropped sharply in the integration and resolution phases: integration appeared in 11.8% of messages in the pre-test and 31.1% in the post-test, and resolution in 6.6% in the pre-test and 25.6% in the post-test. Although the questions had the capability to stimulate these phases, lower frequencies were observed. This may be because there was a need to bring many diverse threads together in a form that would allow resolution and application (T. Anderson, personal communication, June 10, 2009).

Conclusion

The central hypothesis of this study was that if critical thinking is related to teaching presence, then exposing learners participating in asynchronous discussion forums to high teaching presence will result in a high level of critical thinking. In this study, it was evident that when teaching presence was increased, there was a significant increase in learners’ level of critical thinking. It is therefore concluded that by increasing teaching presence, instructors can help students participating in asynchronous discussion forums reach higher levels of critical thinking.

It was also evident that learners were able to articulate their thoughts better with guidance from the instructor. Therefore, instructors should reply promptly to learners to guide them toward posting high-quality messages. This can be a motivating factor for students, as it gives them the impression that the instructor checks each of their messages in detail. Moreover, discussions should include other features such as images, videos, and animation rather than plain text alone, which would help students communicate their ideas easily and creatively. This is supported by Walker (2006), who found that integrating various learning styles in online discussion boards encourages critical thinking.

In one sense, this study also tested the robustness of the Community of Inquiry model. The Community of Inquiry model can serve as a framework for future research seeking to better understand the relationship between teaching presence and learners’ level of critical thinking when the message is used as the unit of analysis for computer-mediated communication transcripts. In addition, because the Practical Inquiry model proved successful at categorizing indicators of critical thinking in this study, researchers should consider integrating these content analysis models with existing learning management systems such as Moodle and Blackboard, since these applications already have rating systems.

The critical thinking achievement levels indicated that learners generally scored lower in the integration and resolution categories. According to T. Anderson (personal communication, June 10, 2009), there is a need to bring many diverse threads together in a form that would allow resolution and application. One might ask how this would translate into practice when trying to move critical thinking to higher levels in online asynchronous discussion forums, since online discussions typically last between 7 and 14 days each, hardly enough time to bring diverse threads together. Richardson and Ice (2009, p. 21) suggested that “instructors should be looking to online discussions as a gauge, evidence of where students’ critical thinking levels are at a particular point in time, and then help them achieve the next level through additional scaffolding”.

While this paper provides promising evidence that through high teaching presence high levels of critical thinking can be achieved, there are limitations to this research. The major limitation of this research was that all data were gathered from undergraduate students at a single institution. Lulee (2008) explained that “every online discussion has its own unique context; the researchers often had to assess a wider range of subjects to infer meanings that presented the actual status”. Therefore, more experimental studies are needed to code multiple courses simultaneously to test the real effect of teaching presence on learners’ level of critical thinking.

Future studies may also examine the effects of teaching presence while taking into account learners’ prior knowledge and understanding of the concept of critical thinking, so that the question of whether increased teaching presence does indeed directly increase learners’ level of critical thinking in asynchronous discussion forums can be answered.

References

Akyol, Z., & Garrison, D. R. (2008). The development of a community of inquiry over time in an online course: Understanding the progression and integration of social, cognitive and teaching presence. Journal of Asynchronous Learning Networks, 12(3), 3-22.

Anderson, T., Rourke, L., Garrison, D. R., & Archer, W. (2001). Assessing teaching presence in a computer conferencing context. Journal of Asynchronous Learning Networks, 5(2), 1-17.

Arbaugh, J. B., & Hwang, A. (2006). Does "teaching presence" exist in online MBA courses? The Internet and Higher Education, 9(1), 9-21.

Arend, B. (2009, January). Encouraging critical thinking in online threaded discussions. The Journal of Educators Online, 6(1), 1-23.

Bangert, A. W. (2009). Building a validity argument for the community of inquiry survey instrument. The Internet and Higher Education, 12(2), 104-111.

Berelson, B. (1952). Content analysis in communication research. Illinois: Free Press.

Berge, Z. (2002). Active, interactive, and reflective e-learning. Quarterly Review of Distance Education, 3(2), 181-190.

Beyer, B. K. (1985). Teaching critical thinking skills: A direct approach. Social Education, 49(4), 297-303.

Bhattacharaya, M. (1999). A study of asynchronous and synchronous discussion on cognitive maps in a distributed learning environment. Proceedings of the WebNet 99 World Conference on the World Wide Web and Internet, Honolulu, HI.

Bullen, M. (1998). Participation and critical thinking in online university distance education. Journal of Distance Education, 13(2), 1-32.

Buraphadeja, V., & Dawson, K. (2008). Content analysis in computer-mediated communication: Analyzing models for assessing critical thinking through the lens of social constructivism. American Journal of Distance Education, 22(3), 130-145.

Capozzoli, M., McSweeney, L., & Sinha, D. (1999). Beyond kappa: A review of interrater agreement measures. The Canadian Journal of Statistics, 27(1), 3-23.

Chen, G., & Chiu, M. M. (2008). Online discussion processes: Effects of earlier messages’ evaluations, knowledge content, social cues and personal information on later messages. Computers & Education, 50(2), 678-692.

Cheong, C. M., & Cheung, W. S. (2008). Online discussion and critical thinking skills: A case study in a Singapore secondary school. Australasian Journal of Educational Technology, 24(5), 556-573.

Chickering, A.W., & Ehrmann, S.C. (1996). Implementing the seven principles: Technology as lever. AAHE Bulletin, 49(2), 3-6.

Corich, S. (2009). Using an automated tool to measure evidence of critical thinking of individuals in discussion forums. In S. Mann & M. Verhaart (Eds.), Proceedings of the 22nd Annual Conference of the National Advisory Committee on Computing Qualifications (pp. 15-21). Napier, New Zealand: NACCQ Research and Support Working Group. Retrieved August 22, 2009, from http://hyperdisc.unitec.ac.nz/naccq09/proceedings/pdfs/15-21.pdf

Dewey, J. (1933). How we think: A restatement of the relation of reflective thinking to the educative process. Massachusetts: D.C. Heath and Company.

Duron, R., Limbach, L., & Waugh, W. (2006). Critical Thinking Framework for Any Discipline. International Journal of Teaching and Learning in Higher Education, 17(2), 160-166.

Ellis, R. A., & Calvo, R. A. (2006). Discontinuities in university student experiences of learning through discussions. British Journal of Educational Technology, 37(1), 55-68.

Ennis, R. H. (1992). The degree to which critical thinking is subject specific: clarification and needed research. In S. P. Norris (Ed.), The generalizability of critical thinking: Multiple perspectives on an educational ideal (pp. 21-37). New York: Teachers College.

Facione, P. A. (1984). Toward a theory of critical thinking. Liberal Education, 70(3), 253-261.

Fritz, P. A., & Weaver, R. L. (1986). Teaching critical thinking skills in the basic speaking course: A liberal arts perspective. Communication Education, 35(2), 174-182.

Gagne, E.D., Yekovich, C.W., & Yekovich, F.R. (1997). The cognitive psychology of school learning (2nd ed.). New York: HarperCollins.

Garrison, D. R. (1993). Quality and theory in distance education: Theoretical considerations. In D. Keegan (Ed.), Theoretical principles of distance education. New York: Routledge.

Garrison, D. R. (2007). Online community of inquiry review: Social, cognitive, and teaching presence issues. Journal of Asynchronous Learning Networks, 11(1), 61-72.

Garrison, D. R., Anderson, T., & Archer, W. (2000). Critical thinking in a text-based environment: Computer conferencing in higher education. The Internet and Higher Education, 2(2-3), 87-105.

Garrison, D. R., Anderson, T., & Archer, W. (2001). Critical thinking, cognitive presence, and computer conferencing in distance education. The American Journal of Distance Education, 15(1), 7-23.

Garrison, D. R., & Arbaugh, J. B. (2007). Researching the community of inquiry framework: Review, issues, and future directions. The Internet and Higher Education, 10(3), 157-172.

Gokhale, A. (1995). Collaborative learning enhances critical thinking. Journal of Technology Education, 7(1), 22-30.

Goodell, J., & Yusko, B. (2005). Overcoming barriers to student participation in online discussions. Contemporary Issues in Technology and Teacher Education, 5(1), 77-92.

Grandon, G. (2006). Asynchronous Discussion Groups: A Use-based Taxonomy with Examples. Journal of Information Systems Education, 17(4), 373-383.

Hanson, S. (2003). Legal Method and Reasoning. Retrieved February 26, 2009 from http://books.google.com.fj/books?id=I-Lnlrun9KsC

Hara, N., Bonk, C. J., & Angeli, C. (2000). Content analysis of online discussion in an applied educational psychology course. Instructional Science, 28(2), 115-152.

Harasim, L. (1989). On-line education as a new domain. In R. Mason & A. Kaye (Eds.), Mindweave: Communication, Computers and Distance Education. Oxford: Pergamon Press.

Hew, K. F., & Cheung, W. S. (2003). An exploratory study on the use of asynchronous online discussion in hypermedia design. Journal of Instructional Science & Technology, 6(1). Retrieved January 3, 2009, from http://www.usq.edu.au/electpub/e-jist/docs/Vol6_No1/an_exploratory_study_on_the_use_.htm

Jonassen, D. H. (1994). Technology as cognitive tools: Learners as designers. Retrieved March 24, 2009 from http://itech1.coe.uga.edu/itforum/paper1/paper1.html

Kanuka, H., & Anderson, T. (1998). On-line interchange, discord, and knowledge construction. Journal of Distance Education, 13(1), 57-74.

Kay, R. (2006). Using asynchronous online discussion to learn introductory programming: An exploratory analysis. Canadian Journal of Learning and Technology, 32(1). Retrieved February 22, 2009 from: http://www.cjlt.ca/content/vol32.1/kay.html

Kennedy, M., Fisher, M. B., & Ennis, R. H. (1991). Critical thinking: Literature review and needed research. In L. Idol & B. F. Jones (Eds.), Educational values and cognitive instruction: Implications for reform. Hillsdale, NJ: Erlbaum.

Knowlton, D. (2005). A Taxonomy of Learning through Asynchronous Discussion. Journal of Interactive Learning Research, 16(2), 155-177.

Krippendorff, K. (1980). Content Analysis: An Introduction to Its Methodology. Newbury Park, CA: Sage.

Lewinson, J. (2005). Asynchronous discussion forums in the changing landscape of the online learning environment. Retrieved March 3, 2009, from http://www.emeraldinsight.com/Insight/ViewContentServlet;jsessionid=B8D19A2F38AAE8E33120CD6BEA68B260?Filename=Published/EmeraldFullTextArticle/Pdf/1650220305.pdf

Li, Q. (2003). Would we teach without technology? A professor’s experience of teaching mathematics education incorporating the internet. Educational Research, 45(1), 61-77.

Lock, J. V., & Redmond, P. (2006). International Online Collaboration: Modeling Online Learning and Teaching. Journal of Online Learning and Teaching, 2(4), 233-247.

Lulee, S. (2008). Assessing teaching presence in instructional computer conferences. Retrieved March 21, 2009, from http://www.educause.edu/wiki/Assessing+Teaching+Presence+in+Instructional+Computer+Conferences

MacKnight, C. B. (2000). Teaching critical thinking through online discussions. EDUCAUSE Quarterly, 23(4), 38-41.

Maurino, P. S. M. (2007). Looking for Critical Thinking in Online Threaded Discussions. Retrieved January 3, 2009, from http://www.usq.edu.au/electpub/e-jist/docs/vol9_no2/papers/full_papers/maurino.pdf

Mazzolini, M., & Maddison, S. (2003). Sage, guide or ghost? The effect of instructor intervention on student participation in online discussion forums. Computers & Education, 40, 237-253.

McKeachie, W. J., Pintrich, P. R., Lin, Y. G., & Smith, D. A. F. (1986). Teaching and learning in the college classroom: A review of the research literature. Retrieved April 16, 2009, from http://www.eric.ed.gov/ERICDocs/data/ericdocs2sql/content_storage_01/0000019b/80/21/88/43.pdf

McLoughlin, C., & Luca, J. (1999). Lonely outpourings or reasoned dialogue? An analysis of text based conferencing as a tool to support learning. Proceedings of the 16th Annual Conference of the Australian Society for Computers in Learning in Tertiary Education (ASCILITE’99), Brisbane, Australia, 217-228. Retrieved March 21, 2009, from http://www.ascilite.org.au/conferences/brisbane99/papers/mcloughlinluca.pdf

Meyer, K. (2003). Face-to-face versus threaded discussions: The role of time and higher-order thinking. Journal of Asynchronous Learning Networks, 7(3), 55-65.

Meyer, K. (2004). Evaluating online discussions: Four different frames of analysis. Journal of Asynchronous Learning Networks, 8(2), 101-114.

Moore, T. (2004). The critical thinking debate: how general are the general thinking skills? Journal of the Higher Education Research and Development Society of Australasia, 23(1), 3-18.

Murphy, E. (2004). An instrument to support thinking critically about critical thinking in online asynchronous discussions. Australasian Journal of Educational Technology, 20(3), 295-315.

Oriogun, P. K., Ravenscroft, A., & Cook, J. (2005). Validating an Approach of Examining Cognitive Engagement within Online Groups. American Journal of Distance Education, 19(4), 197-214.

Ou, C., LeDoux, T., & Crooks, S. (2004). The Effects of Instructor Presence on Critical Thinking in Asynchronous Online Discussion. In R. Ferdig et al. (Eds.), Proceedings of Society for Information Technology and Teacher Education International Conference 2004 (pp. 2989-2993). Chesapeake, VA: AACE.

Özmen, K. S. (2008). Current State of Understanding of Critical Thinking in Higher Education. Retrieved April 24, 2009, from http://www.gefad.gazi.edu.tr/window/dosyapdf/2008/2/2008-2-109-127-7.pdf

Paul, R. (2004). The state of critical thinking today. Retrieved February 16, 2009 from http://www.criticalthinking.org/professionaldev/the-state-ct-today.cfm

Perkins, C., & Murphy, E. (2006). Identifying and measuring individual engagement in critical thinking in online discussions: An exploratory study [Electronic version]. Educational Technology & Society, 9(1), 298-307.

Pisutova-Gerber, K., & Malovicova, J. (2009). Critical and higher order thinking in online threaded discussion in the Slovak context. International Review of Research in Open and Distance Learning, 10(1). Retrieved March 21, 2009, from http://www.irrodl.org/index.php/irrodl/article/view/589/1175

Rourke, L., Anderson, T., Garrison, D. R., & Archer, W. (1999). Assessing social presence in asynchronous, text-based computer conferencing. Journal of Distance Education, 14(3), 51-70.

Rourke, L., Anderson, T., Garrison, D. R., & Archer, W. (2001). Methodological Issues in the Content Analysis of Computer Conference Transcripts. International Journal of Artificial Intelligence in Education, 12, 8-22.

Rovai, A. P. (2000). Online and traditional assessments: What is the difference? The Internet and Higher Education, 3(3), 141-151.

Rovai, A. P. (2007). Facilitating online discussions effectively. The Internet and Higher Education, 10(1), 77-88.

Rudin, B. (1984). Teaching critical thinking skills. Social Education, 49(4), 279-281.

Schafersman, S. D. (1991). An introduction to critical thinking. Retrieved March 21, 2009 from http://www.freeinquiry.com/critical-thinking.html

Scriven, M., & Paul, R. (2007). The critical thinking community. Retrieved January 4, 2009 from: http://www.criticalthinking.org/aboutCT/definingCT.cfm

Shea, P., & Bidjerano, T. (2009). Community of inquiry as a theoretical framework to foster “epistemic engagement” and “cognitive presence” in online education. Computers & Education, 52(3), 543-553.

Shea, P., Pickett, A., & Pelz, W. (2003). A follow-up investigation of teaching presence in the SUNY Learning Network. Journal of Asynchronous Learning Networks, 7(2), 61-80.

Swan, K. (2005). A constructivist model for thinking about learning online. In J. Bourne & J.C. Moore (Eds.), Elements of Quality Online Education: Engaging Communities. Needham, MA: Sloan-C. Retrieved March 24, 2009 from http://www.kent.edu/rcet/Publications/upload/constructivist%20theory.pdf

Swan, K., Shea, P., Richardson, J., Ice, P., Garrison, D. R., Cleveland-Innes, M., & Arbaugh, J. B. (2008). Validating a measurement tool of presence in online communities of inquiry. E-Mentor, 2(24), 1-12.

Tice, E. T. (1999). What is critical thinking? Journal of Excellence in Higher Education. Retrieved March 1, 2009, from http://faculty.uophx.edu/Joehe/Past/thinking.htm

Walker, G. (2006). Critical thinking in asynchronous discussions. International Journal of Instructional Technology and Distance Learning, 2(6), 15-22.

Warren, C. M. J. (2008). The use of online asynchronous discussion forums in the development of deep learning among postgraduate real estate students. Retrieved July 21, 2009 from http://espace.library.uq.edu.au/eserv/UQ:130878/n768_Bear_Discussion.pdf

Warschauer, M. (1996). Comparing face-to-face and electronic discussion in the second language classroom. Computer Assisted Language Instruction Consortium (CALICO) Journal, 13(2), 7-26.

Whittle, J., Morgan, M., & Maltby, J. (2000). Higher Learning Online: Using Constructivist Principles to Design Effective Asynchronous Discussion. Paper presented at the North American Web Conference, Canada. Retrieved February 26, 2009, from http://www.unb.ca/naweb/2k/papers/whittle.htm

Wiersma, W., & Jurs, S. G. (2005). Research methods in education: An introduction (8th ed.). Boston: Pearson Education.

About the Author

Deepak Prasad is an Educational Technologist at the Centre for Flexible and Distance Learning, University of the South Pacific, Fiji Islands. He holds a BTech in Electrical and Electronics Engineering, a BA in Education, a master's degree in Education Technology, and a Diploma in Tertiary Teaching. His research interests are ICT and its applications in open and distance learning.

Email: deepak.v.prasad@gmail.com